The Jetsons debuted this month in 1962. The cartoon depicted a family living a hundred years in the future, in 2062. The swooping architectural style, with the quite fun name Googie, serves as the visual language of the future in shows from The Incredibles to Futurama. The everyday gadgetry in The Jetsons foreshadows today’s drones, holograms, moving walkways and stationary treadmills, flat-screen televisions, tablet computers, and smart watches.

Remember, color television was at the cutting edge of technology when The Jetsons debuted, so this list is impressive. But that smart watch? That last one wasn’t by accident.

The dominant smart watch in 2020 is the Apple Watch, designed by Marc Newson and Jony Ive. In an interview with the New York Times, Marc Newson explained that his fascination with The Jetsons led him into the world of design. “Modernism and the idea of the future were synonymous with the romance of space travel and the exotic materials and processes of space technology. Newson’s streamlined aesthetic was influenced by his Jetsonian vision of the future.” I imagine the first time Newson FaceTimed Jony Ive on an Apple Watch, they felt the future had finally arrived.

Designing the future has constraints that imagining the future lacks.

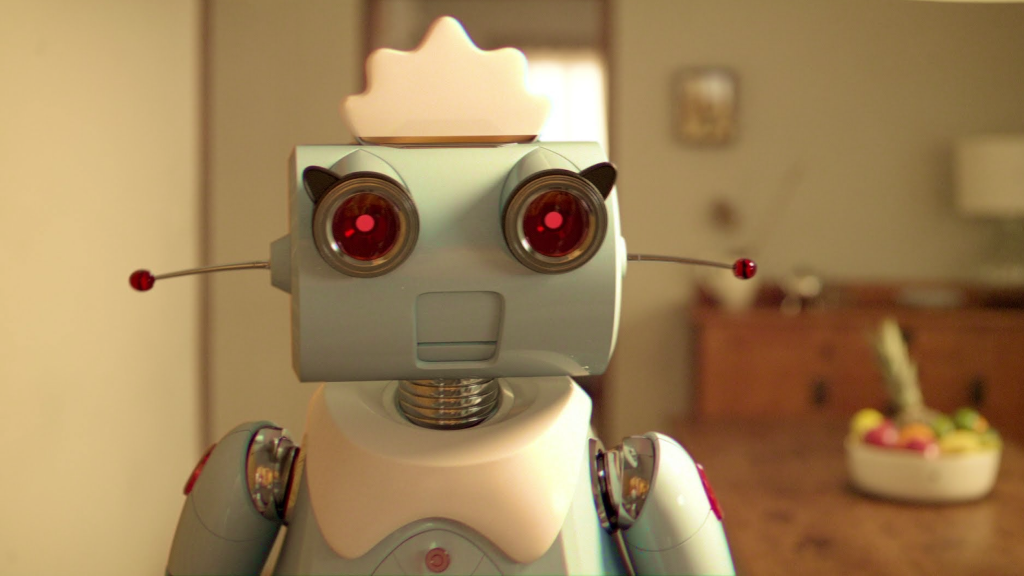

For starters, people and culture constrain innovation. Consider George and his flying car, Elroy and his jetpack, and space tourism. All these are technically feasible in 2020. But I wouldn’t trust a young boy with a jetpack, nor would most of us have money for a trip to the moon. Another constraint is technical complexity. Sure, we have talking dogs. But the reality is much different from the Jetsons’ Astro. And yes, we have AI and robotics. But Siri is no R.U.D.I.

When designing future security capabilities and controls, we need to identify and quantify the constraints. One technique for this is the Business Transformation Readiness Assessment. Evaluate factors such as:

- Desire, willingness, and resolve

- IT capacity to execute

- IT ability to implement and operate

- Organizational capacity to execute

- Organizational ability to implement and operate

- More factors here: https://pubs.opengroup.org/…/chap26.html
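
To make the scoring concrete, here is a minimal sketch in Python. Only the factor names come from the list above. The 1 to 5 scale, the `need` rating, the sample scores, and the choice to multiply need by the weakest factor are all my own assumptions for illustration, not part of the assessment itself.

```python
from dataclasses import dataclass

# Factor names mirror the list above. The 1-5 scale is an assumption.
FACTORS = (
    "desire_willingness_resolve",
    "it_capacity_to_execute",
    "it_ability_to_implement_and_operate",
    "org_capacity_to_execute",
    "org_ability_to_implement_and_operate",
)


@dataclass
class Capability:
    name: str
    need: int               # hypothetical: 1 (nice to have) to 5 (critical)
    scores: dict[str, int]  # hypothetical: factor name -> 1 (low) to 5 (high)

    def readiness(self) -> int:
        """Readiness is capped by the weakest factor (an illustrative choice)."""
        missing = [f for f in FACTORS if f not in self.scores]
        if missing:
            raise ValueError(f"{self.name}: unscored factors {missing}")
        return min(self.scores[f] for f in FACTORS)

    def priority(self) -> int:
        """Weigh what's needed against what's feasible."""
        return self.need * self.readiness()


def rank(capabilities):
    """Return capabilities ordered from highest to lowest priority."""
    return sorted(capabilities, key=lambda c: c.priority(), reverse=True)


# Made-up scores, purely for illustration.
siem = Capability("Well-tuned SIEM", need=4,
                  scores=dict(zip(FACTORS, (4, 4, 3, 4, 3))))
ml = Capability("AI/ML deep learning platform", need=3,
                scores=dict(zip(FACTORS, (5, 2, 1, 2, 1))))

for cap in rank([ml, siem]):
    print(f"{cap.name}: readiness={cap.readiness()} priority={cap.priority()}")
```

Taking the minimum rather than the average is deliberate in this sketch: plenty of enthusiasm doesn't help if nobody can operate the thing once it's built. An average would hide that.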

With this evaluation, we can rank what’s feasible against what’s needed. We can act on areas with momentum (desire, willingness, resolve) and build capabilities that can be maintained. But! There’s one additional step.

We don’t need a robot to push around a vacuum when we have a robot vacuum. We don’t need a full AI/ML deep learning platform when we can have a well-tuned SIEM. Implement security in a minimum viable way.

Identify the constraints. Select the security capability the organization is most ready for. Then build Roombas, not Rosies.

This article is part of a series on designing cyber security capabilities. To see other articles in the series, including a full list of design principles, click here.