Last week at Chicago’s Camp IT, I presented on IT risk management and concluded by focusing on the intersection of risk and action. The result is a CIO Centric Approach that reprioritizes risks based on an organization’s constraints and IT capabilities. My Chicago talk led to several good discussions, and this article briefly summarizes the method and how you can apply it to your risk management program.

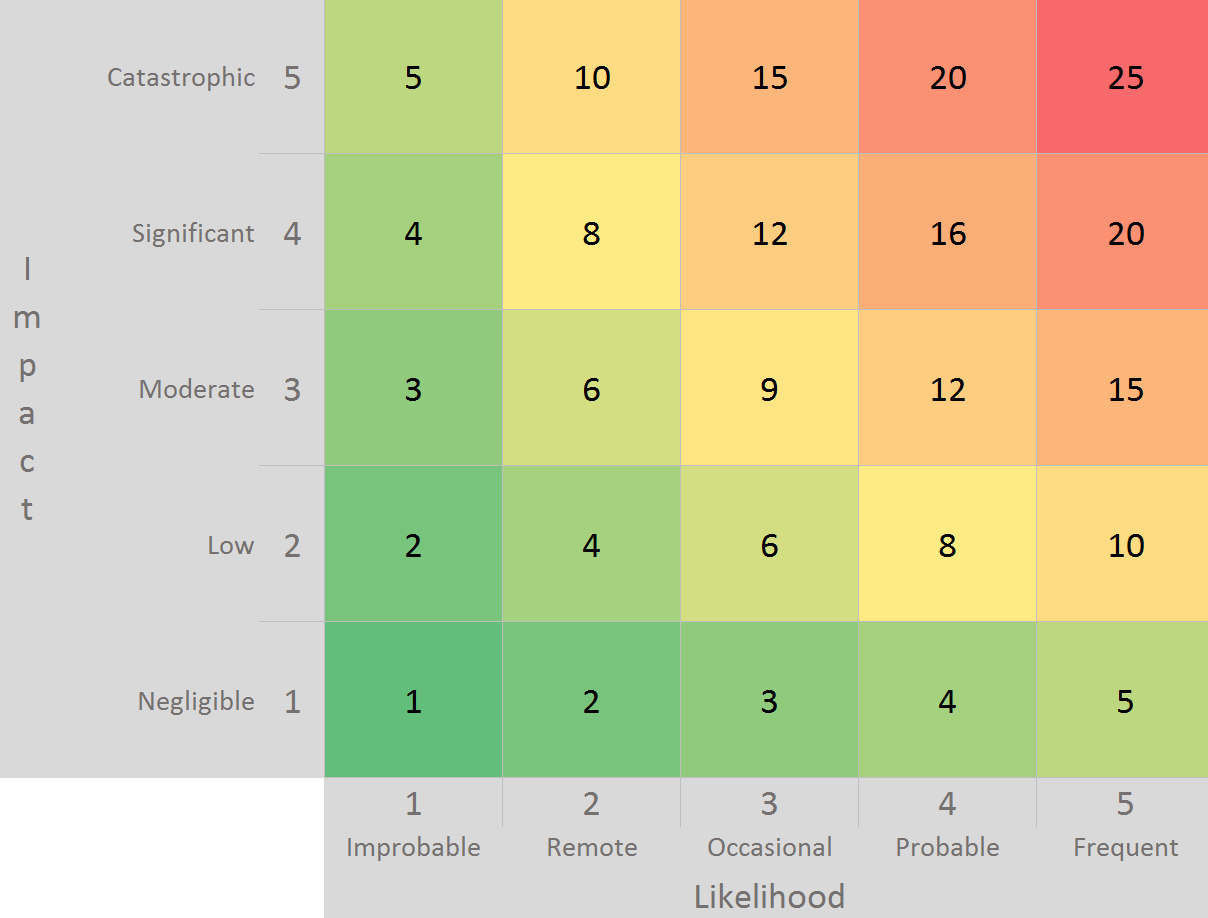

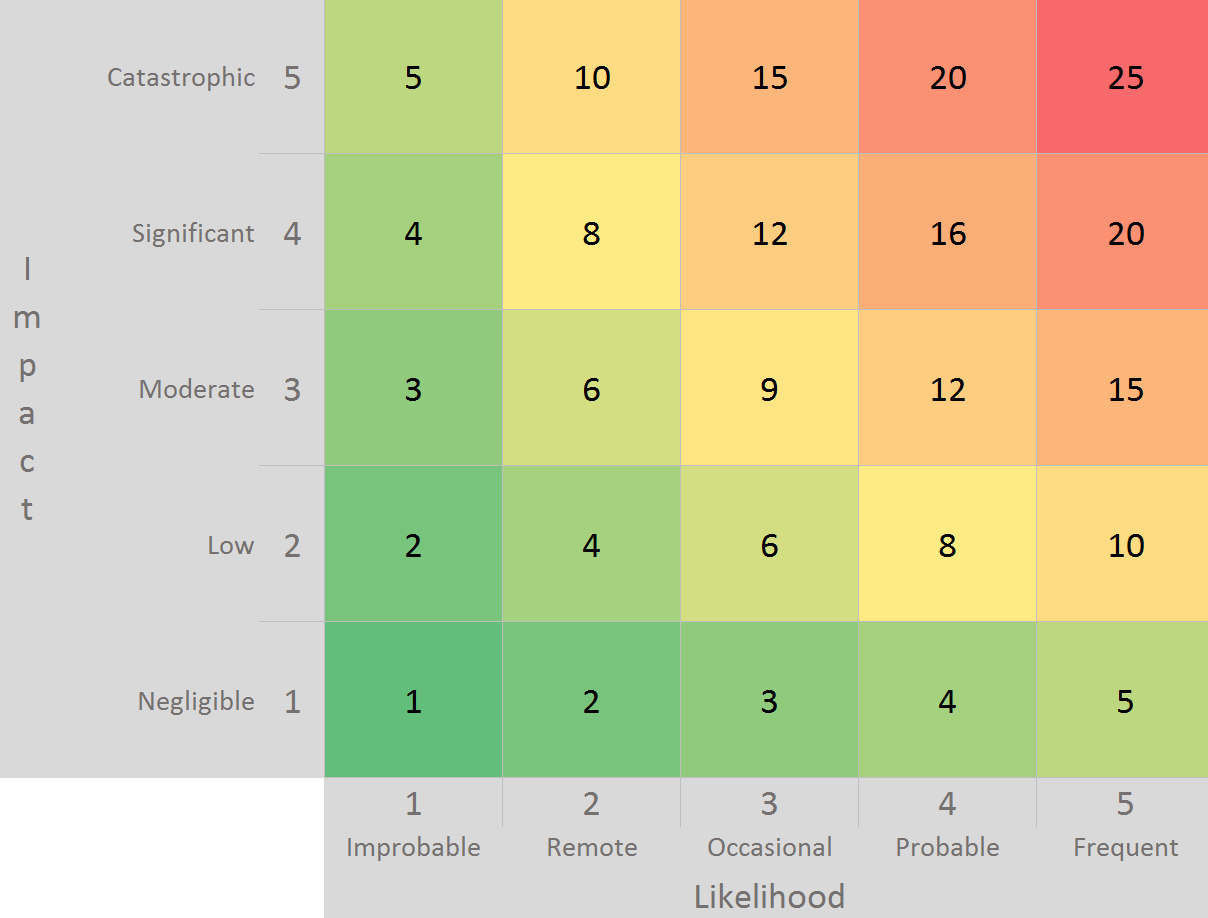

First, let’s briefly recap risk rating by impact and likelihood. This qualitative IT risk management approach enumerates concerns and then assigns each one a 1-5 score for its impact on the organization and a 1-5 score for the likelihood of the threat being realized. Multiplying the two gives a risk rating from 1 to 25. How useful the exercise is depends largely on how the values are derived, with more mature programs using a weighted approach that factors in the organization’s mission, objectives, and mandates. Once completed, the exercise produces a risk rating table similar to the one below.
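To make the scoring concrete, here is a minimal Python sketch of an unweighted risk rating table. The `Risk` class, the `risk_table` helper, and the sample risks and scores are all hypothetical; a more mature program would derive impact and likelihood from weighted inputs such as mission, objectives, and mandates rather than entering them directly.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One enumerated concern, scored 1-5 on impact and likelihood (hypothetical model)."""
    name: str
    impact: int      # 1 (minor) to 5 (severe) impact on the organization
    likelihood: int  # 1 (rare) to 5 (almost certain) chance the threat is realized

    def __post_init__(self) -> None:
        # Reject scores outside the 1-5 scale.
        for field_name in ("impact", "likelihood"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def rating(self) -> int:
        # Qualitative risk rating: impact x likelihood, so between 1 and 25.
        return self.impact * self.likelihood


def risk_table(risks: list[Risk]) -> list[tuple[str, int, int, int]]:
    """Return (name, impact, likelihood, rating) rows, highest rating first."""
    ordered = sorted(risks, key=lambda r: r.rating, reverse=True)
    return [(r.name, r.impact, r.likelihood, r.rating) for r in ordered]


if __name__ == "__main__":
    # Hypothetical risks and scores, for illustration only.
    risks = [
        Risk("Unmonitored infrastructure events", impact=5, likelihood=3),
        Risk("Unpatched workstations", impact=4, likelihood=4),
        Risk("Weak vendor contracts", impact=3, likelihood=2),
    ]
    for row in risk_table(risks):
        print(row)
```

Sorting by rating alone is exactly what surfaces the biggest risk reductions, and also what hides differences in effort between equally rated risks.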

The advantage, for a security owner, is seeing at a glance which concerns, once mitigated, would produce the largest reduction in the organization’s overall risk. We can then produce the annual audit phonebook with its long laundry list of recommendations.

The disadvantage, for the IT owner, is that effort is not factored in. For example, suppose two risks are both rated 15, but one takes 12 months to resolve and the other takes 3 months. Both are listed side by side and prioritized equally by the security owner. The trouble is that the risk rating exercise does not surface quick wins or prioritize actions.

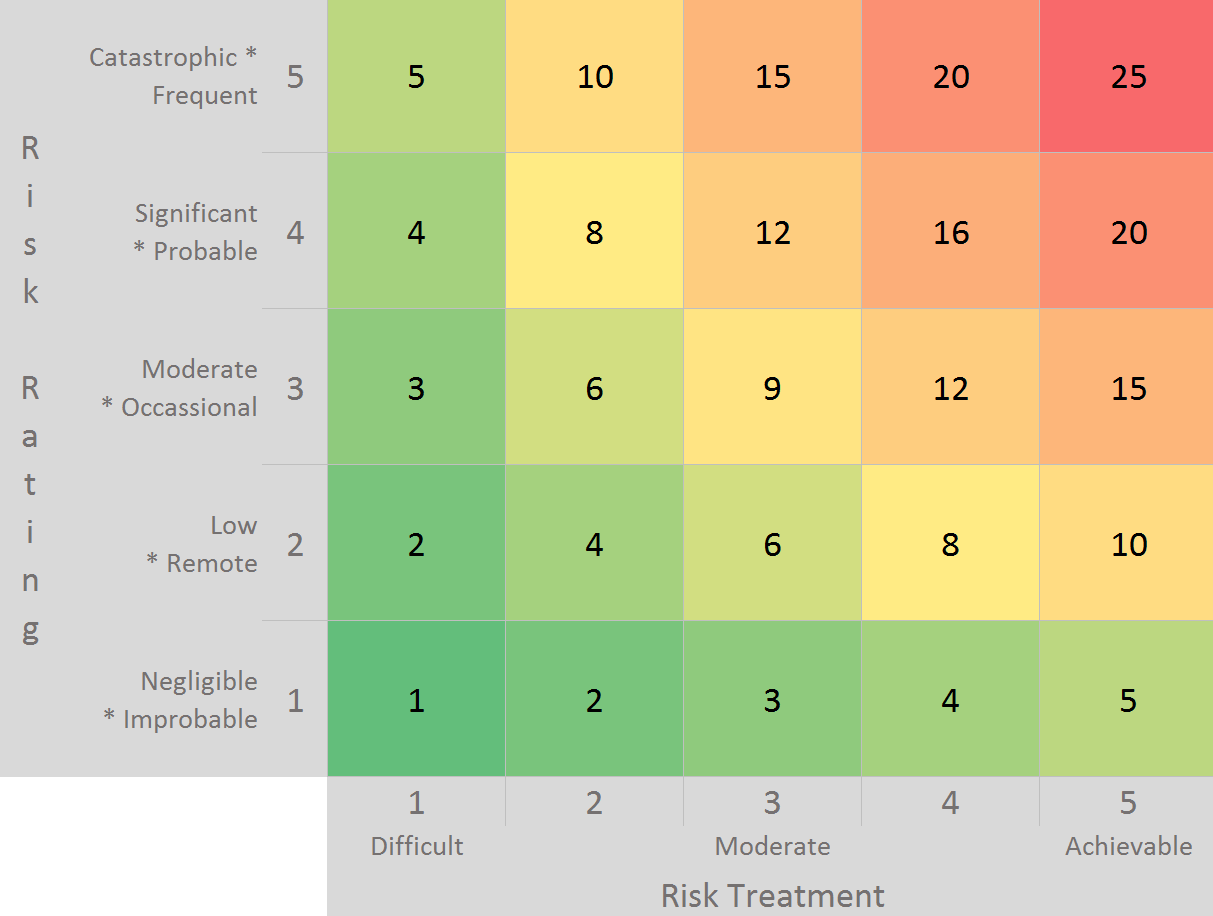

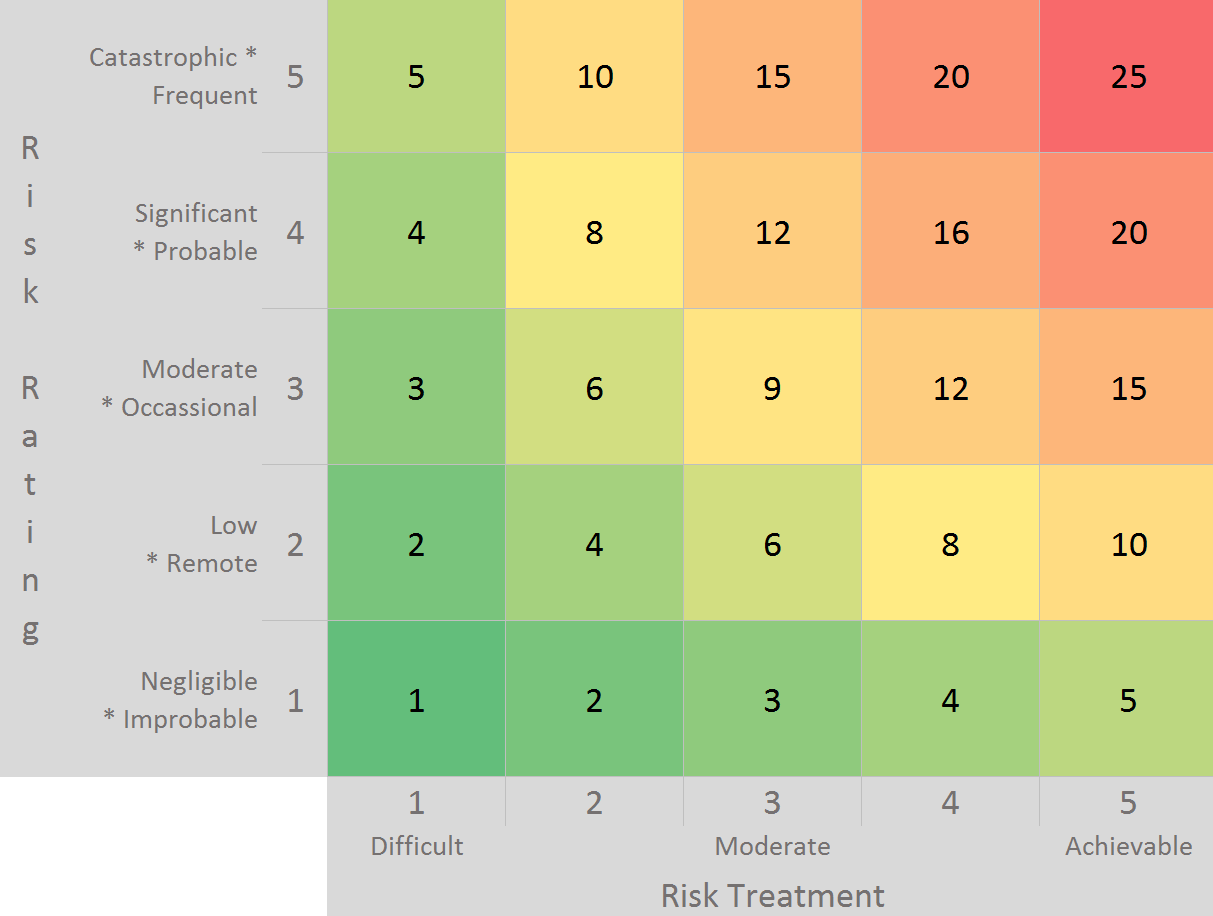

Let’s revisit the risks by looking at constraints and capabilities. First, we brainstorm a list of two or three constraints that would slow the risk treatment process. The list will vary from time to time, and from organization to organization. For the purpose of this article, let’s go with:

- Culture – the current team and organizational culture accepts the change

- Budget – the budget is available to implement the change

Next, let’s list the capabilities. Again, this list will vary. A good starting point is:

- Available staff – the people implementing the change are available and skilled

- Available tech – the technology needed to address the risk is available

- Compliance – the compliance team is engaged in assisting with the change

With these lists in hand, we can now weight how much each constraint and capability affects our ability to execute. The weights are typically set in a roundtable discussion with the stakeholders and should total 100%. For example, we may decide:

- Culture = 20%

- Budget = 10%

- Available Staff = 35%

- Available Tech = 25%

- Compliance = 10%
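Because the weights are shares of one whole, it is worth checking that they total 100% before scoring anything; otherwise a score computed as a simple sum of weight times rating will not stay on the 1-5 scale. A minimal Python sketch using the example weights above (the `FACTOR_WEIGHTS` name and the `validate_weights` helper are hypothetical):

```python
import math

# Example weights from the roundtable above; your factors and percentages will differ.
FACTOR_WEIGHTS = {
    "Culture": 0.20,          # constraint
    "Budget": 0.10,           # constraint
    "Available Staff": 0.35,  # capability
    "Available Tech": 0.25,   # capability
    "Compliance": 0.10,       # capability
}


def validate_weights(weights: dict[str, float]) -> None:
    """Raise ValueError unless every weight is non-negative and they total 100%."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights cannot be negative")
    total = sum(weights.values())
    # Compare with a tolerance: decimal percentages do not add up exactly in floating point.
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError(f"weights must total 100%, got {total:.0%}")


validate_weights(FACTOR_WEIGHTS)  # passes silently for the example weights
```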

With the factors and weights decided, we can talk through each risk treatment, rating each factor at 1 (difficult), 3 (moderate), or 5 (achievable). The risk treatment score is then the weighted average of these ratings and reflects how actionable the control is. For example:

DSS05.07) Monitor infrastructure for security events

- Culture = 20% = 3

- Budget = 10% = 5

- Available Staff = 35% = 3

- Available Tech = 25% = 5

- Compliance = 10% = 3

3.7 = (20%*3) + (10%*5) + (35%*3) + (25%*5) + (10%*3)
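The same calculation as a minimal Python sketch, assuming the five example factors and weights above and the 1/3/5 rating scale. `treatment_score` and the dictionary names are hypothetical; the function divides by the total weight so the result stays a true weighted average even if the roundtable's percentages do not add up to exactly 100%.

```python
# Weights from the roundtable example; hypothetical for your organization.
WEIGHTS = {
    "Culture": 0.20,
    "Budget": 0.10,
    "Available Staff": 0.35,
    "Available Tech": 0.25,
    "Compliance": 0.10,
}

ALLOWED_RATINGS = {1, 3, 5}  # 1 = difficult, 3 = moderate, 5 = achievable


def treatment_score(ratings: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted average of factor ratings: how actionable a risk treatment is (1-5)."""
    if ratings.keys() != weights.keys():
        raise ValueError("rate every weighted factor exactly once")
    for factor, rating in ratings.items():
        if rating not in ALLOWED_RATINGS:
            raise ValueError(f"{factor}: rating must be 1, 3, or 5, got {rating}")
    total_weight = sum(weights.values())
    return sum(weights[f] * ratings[f] for f in weights) / total_weight


# DSS05.07) Monitor infrastructure for security events, rated as in the example above.
dss05_07 = {
    "Culture": 3,
    "Budget": 5,
    "Available Staff": 3,
    "Available Tech": 5,
    "Compliance": 3,
}
print(round(treatment_score(dss05_07), 2))  # 3.7
```

Restricting ratings to 1, 3, and 5 mirrors the three-level scale above and keeps the roundtable from debating fine distinctions.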

Having reviewed the mitigations, we can plot the risk treatment scores along one axis of a chart and the previously defined risk ratings along the other. The risk ratings are rescaled as impact * likelihood / 5, so the 1-25 rating tops out at 5, matching the 1-5 treatment scale. The completed table, shown below, aligns the risks that the CISO is concerned about with the areas the CIO has capabilities to address.
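A minimal Python sketch of the plotting step follows. The method does not fix which axis is which or where to draw quadrant lines, so the axis assignment, the `THRESHOLD` of 3.0, the quadrant labels, and the helper names are all hypothetical choices for illustration. The example revisits the case of two risks both rated 15: once actionability is on the chart, they no longer sit side by side.

```python
from typing import NamedTuple


class PlottedRisk(NamedTuple):
    name: str
    risk: float           # impact * likelihood / 5, so between 0.2 and 5
    actionability: float  # weighted risk treatment score, between 1 and 5


THRESHOLD = 3.0  # hypothetical cut line for both axes; agree on it with stakeholders

QUADRANT_ORDER = {"act now": 0, "plan and fund": 1, "opportunistic": 2, "monitor": 3}


def plot_point(name: str, impact: int, likelihood: int, treatment_score: float) -> PlottedRisk:
    """Rescale the 1-25 risk rating so it tops out at 5, like the treatment score."""
    return PlottedRisk(name, impact * likelihood / 5, treatment_score)


def quadrant(point: PlottedRisk, threshold: float = THRESHOLD) -> str:
    """Hypothetical labels for the four regions of the risk vs. actionability chart."""
    high_risk = point.risk >= threshold
    actionable = point.actionability >= threshold
    if high_risk and actionable:
        return "act now"
    if high_risk:
        return "plan and fund"
    if actionable:
        return "opportunistic"
    return "monitor"


def prioritize(points: list[PlottedRisk]) -> list[tuple[str, str]]:
    """Sort by quadrant, then by risk (highest first), then by actionability."""
    ranked = sorted(
        points,
        key=lambda p: (QUADRANT_ORDER[quadrant(p)], -p.risk, -p.actionability),
    )
    return [(p.name, quadrant(p)) for p in ranked]


if __name__ == "__main__":
    # Impact and likelihood values here are hypothetical; 3.7 is the DSS05.07 score above.
    points = [
        plot_point("DSS05.07 Monitor infrastructure", impact=5, likelihood=3, treatment_score=3.7),
        plot_point("Hypothetical 12-month fix", impact=5, likelihood=3, treatment_score=1.8),
        plot_point("Hypothetical minor gap", impact=2, likelihood=2, treatment_score=4.4),
    ]
    for name, label in prioritize(points):
        print(f"{label:>14}: {name}")
```

Both 15-rated risks land at 3.0 on the risk axis, but only the one the team can actually execute on falls in the "act now" region.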

Action-oriented IT risk management is a straightforward extension of a risk assessment that can greatly improve the resulting mitigations. By being CIO Centric and prioritizing based on an organization’s constraints and IT capabilities, we accelerate time-to-value and risk reduction. It’s one more simple way to bridge the gap between audits and results.

Cross-posted at CBI: http://content.cbihome.com/blog/cbi-action-oriented-it-risk-management