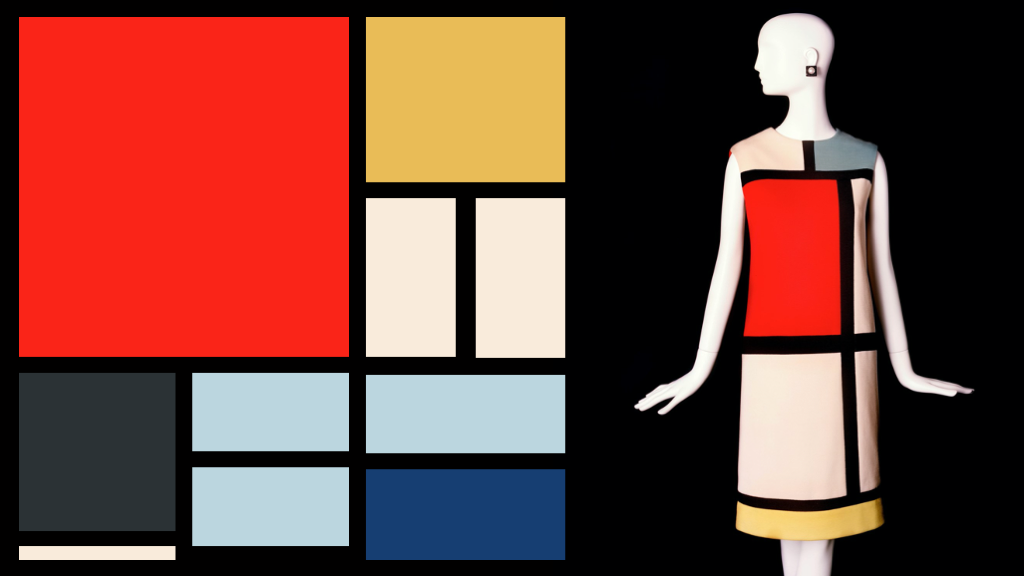

Frameworks fade but security is eternal. Said with apologies to Yves Saint Laurent.

Yves Saint Laurent was a dominant force in fashion from the 1960s through to the end of the century. His strengths stemmed from three areas. First, seeing the underlying fundamentals and being able to re-envision them across genders, across times, and across trends. Second, the ability to cross artforms for inspiration, most notably with Piet Mondrian and geometrical shapes. Finally, the ability to reformulate the high fashion of couture for mass production. Yves Saint Laurent was the first to open a ready-to-wear line in Paris. He was a designer who mastered how to take the pieces apart and put them back together for new tastes and new markets. It was Yves Saint Laurent who once famously said, “fashion fades but style is eternal.”

Last week, we looked at how the adoption of a control — doing something right but rare — has surprising stopping power against common attacks. But the fast-changing early adoption must be balanced with slow-changing fundamentals.

Cybersecurity can be a bit too much like fashion. With every major event, there’s a new trend. The media buzz says that new threats appear every day, and that our ways of defending become dated and ineffective as quickly as they’re implemented. New frameworks cry out that the old ways were wrong.

This last bit is particularly on my mind in 2020. A new version of the CIS Critical Security Controls came out late last year. NIST is releasing a new version of its standard for security and privacy controls (NIST SP 800-53B). And the new PCI DSS (Data Security Standard) for credit card security is due any time now. Each framework will be accompanied by a wave of press on how everything has changed. The last version is so last season, and simply won’t do.

But is it? Is it really?

Like style, fundamentals in security remain the same even while the specifics evolve. We need to know our people and our technology. We need visibility into what’s happening and what’s changing. We need to think in terms of lifecycles and act in terms of incidents. We need to make sure the essential habits which result in defensible positions are done regularly. Finally, we need to understand the adversary’s objectives and tactics. From mainframes to data centers to cloud infrastructures to tomorrow, the fundamentals hold true.

A security architecture is composed of a series of building blocks. Some building blocks should be innovative and ahead of our peers. Most building blocks should do the fundamentals and broadly cover the frameworks.

Do the fundamentals well. Do them consistently. Do them with style.

This article is part of a series on designing cyber security capabilities. To see other articles in the series, including a full list of design principles, click here.